EHoI: A Benchmark for Task-oriented Hand-Object Action Recognition Via Event Vision

Abstract

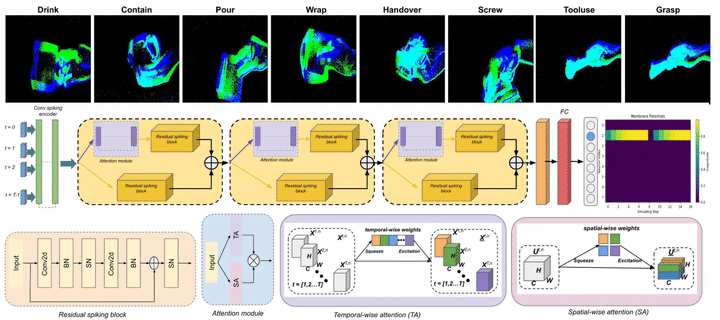

The event-based camera is a novel neuromorphic vision sensor whose low latency, asynchronous data stream, and high dynamic range allow it to perceive a wide variety of dynamic behaviors. Event cameras have been used to address problems such as object tracking, visual odometry, and gesture recognition. However, applying event vision to analyze hand-object actions in dynamic environments, a problem that regular CMOS cameras cannot handle, has received little research attention. This work presents a richly annotated, task-oriented hand-object action dataset consisting of asynchronous event streams captured by an event-based camera system in different application scenarios. In addition, we design an attention-based residual spiking neural network (ARSNN) that learns temporal-wise and spatial-wise attention simultaneously and introduces a dedicated residual connection structure to recognize dynamic hand-object actions. Extensive comparisons with existing baseline methods form a vision benchmark. We also show that the learned recognition model can be transferred to classify real robot hand-object actions.
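The abstract does not describe how the event streams are fed to ARSNN. As a rough illustration only, the sketch below shows one common way to prepare an asynchronous event stream for a spiking network. This approach is assumed here and is not taken from this work. Events are given as hypothetical `(x, y, t, p)` tuples. The hypothetical helper `events_to_frames` splits them into a fixed number of equal-duration time bins, with separate ON/OFF polarity channels. The per-time-step values then drive a basic leaky integrate-and-fire unit (`lif_neuron`, with assumed `tau` and `v_threshold`). ARSNN's temporal-wise and spatial-wise attention and its residual structure are not reproduced here.

```python
from typing import List, Sequence, Tuple

# Hypothetical event format: (x, y, t, p) = pixel column, pixel row,
# timestamp, polarity (+1 for ON, -1 for OFF).
Event = Tuple[int, int, float, int]


def events_to_frames(events: Sequence[Event], width: int, height: int,
                     num_bins: int) -> List[List[List[List[int]]]]:
    """Accumulate events into num_bins frames indexed [bin][channel][y][x].

    Assumption (not from the paper): channel 0 counts ON events, channel 1
    counts OFF events, and the bins split [t_min, t_max] into equal intervals.
    """
    frames = [[[[0] * width for _ in range(height)] for _ in range(2)]
              for _ in range(num_bins)]
    if not events:
        return frames
    t_min = min(e[2] for e in events)
    t_max = max(e[2] for e in events)
    span = t_max - t_min
    for x, y, t, p in events:
        # Map the timestamp to a bin index; events at t_max go to the last bin.
        if span == 0:
            b = 0
        else:
            b = min(int((t - t_min) / span * num_bins), num_bins - 1)
        channel = 0 if p > 0 else 1
        frames[b][channel][y][x] += 1
    return frames


def lif_neuron(inputs: Sequence[float], tau: float = 2.0,
               v_threshold: float = 1.0) -> List[int]:
    """Discrete-time leaky integrate-and-fire neuron with hard reset to 0.

    Assumption: tau and v_threshold are illustrative defaults, not values
    reported for ARSNN.
    """
    v = 0.0
    spikes = []
    for x in inputs:
        v = v + (x - v) / tau  # leaky integration toward the current input
        if v >= v_threshold:
            spikes.append(1)
            v = 0.0            # hard reset after emitting a spike
        else:
            spikes.append(0)
    return spikes


if __name__ == "__main__":
    stream = [(0, 0, 0.0, 1), (1, 0, 0.5, -1), (0, 0, 1.0, 1), (0, 0, 1.0, 1)]
    frames = events_to_frames(stream, width=2, height=1, num_bins=2)
    # ON-channel counts at pixel (0, 0) across the two time bins.
    on_counts = [frames[b][0][0][0] for b in range(2)]
    print(on_counts, lif_neuron(on_counts))
```

For example, `lif_neuron([1.0, 2.0])` returns `[0, 1]`. The membrane potential reaches 0.5 after the first step and 1.25 after the second, which crosses the threshold. Equal-duration bins keep the number of time steps fixed no matter how many events a clip contains. Binning by a fixed event count is a common alternative when motion speed varies.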